In recent years, we’ve grown accustomed to chatting with AI models like GPT, which understand and generate human language with impressive fluency. But what if we could teach GPT to “speak” the quantum language? Nature, at its most fundamental level, is governed by quantum physics. When we probe quantum systems through measurements, we receive responses in the form of measurement outcomes: messages from nature about its quantum behavior. These outcomes are the quantum language that nature speaks to us. Can we teach AI to understand this quantum language, that is, train it to predict the outcomes of quantum experiments as if it were a quantum system itself?

In our recent work (arXiv:2411.03285), we propose ShadowGPT, an approach that enables a GPT language model to generate classical shadows: a specific kind of quantum language that nature uses to describe the quantum world through random measurements. This combination of AI and quantum physics opens new ways of understanding complex quantum systems.

Our research centers on a longstanding problem in physics: solving quantum many-body problems. Given the parameters of a quantum system’s Hamiltonian, we want to predict its ground-state properties, which describe how the system behaves in its lowest-energy state. This challenge is not just a mathematical puzzle; it is key to understanding quantum materials, designing error-resilient quantum computers, and developing quantum teleportation technologies. However, traditional numerical methods on classical computers fall short, because representing a generic quantum many-body state classically requires resources that grow exponentially with system size, overwhelming even the most powerful classical computers.
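To make “exponential” concrete: a generic state of \(n\) qubits is described by \(2^n\) complex amplitudes. The back-of-the-envelope sketch below assumes each amplitude is stored as a double-precision complex number (16 bytes) with no compression or symmetry tricks; the function name is illustrative.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n_qubits amplitudes of a generic n-qubit state.

    Assumes complex128 amplitudes (16 bytes each) and no compression or symmetry.
    """
    return bytes_per_amplitude * 2**n_qubits


for n in (10, 30, 50):
    print(f"{n} qubits: {statevector_bytes(n):,} bytes")
```

Under this assumption, 30 qubits already need 16 GiB, and 50 qubits need \(2^{54}\) bytes (16 PiB), with every additional qubit doubling the cost.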

Quantum computing offers a promising alternative, built on quantum state preparation and randomized measurement. By preparing a quantum state (the “input” to a quantum device), carrying out quantum operations physically, and then performing randomized measurements (the “output” back to the classical world), we can collect data from quantum systems in real experiments. This data reveals how quantum systems behave, showing us how nature itself solves quantum many-body problems. However, quantum experiments remain costly and resource-intensive, which makes this quantum data highly valuable.

So why not use this data to train a machine learning model that can predict quantum behavior without repeating costly experiments? This is where generative AI steps in: our approach teaches a GPT model to learn from quantum data, effectively allowing it to simulate the quantum device. In other words, we are training GPT to speak the quantum language the way a quantum device does.

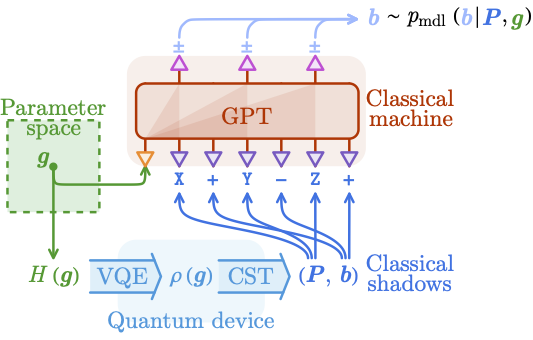

Our innovation, ShadowGPT, combines two powerful techniques: classical shadow tomography and generative pre-trained transformer (GPT) models. Classical shadow tomography uses randomized measurements to produce “classical shadows”, an efficient classical representation of a quantum state from which many properties of the state can be estimated systematically using simple statistics of the data. Each classical shadow is essentially a record of one round of randomized measurements, in which each qubit is measured independently in a randomly chosen Pauli basis (X, Y, or Z) and yields a binary outcome (+ or −). This record can be organized into a sequence of tokens, naturally structured for a language model to process. The quantum information in the underlying state is fully encoded in the intricate correlations between these measurement outcomes, across different collections of qubits and different combinations of observables, and it can be decoded with the classical shadow reconstruction formula.
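The post does not spell out the tokenization or the decoding formula, so here is a minimal sketch under stated assumptions. It uses a hypothetical six-token vocabulary (one token per basis/outcome pair, such as `Z-`) and the standard single-qubit Pauli-shadow snapshot \(\hat\rho = (I + 3sP)/2\), where \(P\) is the measured Pauli and \(s = \pm 1\) the outcome. Tracing this snapshot against a Pauli string gives a single-shot estimate of \(\prod_i 3 s_i\) if every qubit in the string was measured in the matching basis, and 0 otherwise; averaging over records estimates the expectation value. The names `VOCAB`, `tokenize`, `pauli_estimate`, and `estimate` are illustrative, not ShadowGPT’s actual code.

```python
from typing import Dict, List, Sequence, Tuple

# One shadow record: a (basis, outcome) pair per qubit, basis in "XYZ", outcome in {+1, -1}.
Record = Sequence[Tuple[str, int]]

# Hypothetical six-token vocabulary: "X+" -> 0, "X-" -> 1, ..., "Z-" -> 5.
VOCAB: Dict[str, int] = {tok: i for i, tok in enumerate(b + s for b in "XYZ" for s in "+-")}


def tokenize(record: Record) -> List[int]:
    """Map a shadow record to token ids, one token per qubit in qubit order."""
    return [VOCAB[basis + ("+" if outcome > 0 else "-")] for basis, outcome in record]


def pauli_estimate(record: Record, pauli: Dict[int, str]) -> float:
    """Single-shot estimate of <P> for a Pauli string given as {qubit: "X" | "Y" | "Z"}.

    Each measured qubit contributes the snapshot (I + 3 s P_meas) / 2, whose trace
    against a Pauli Q is 3 s when Q == P_meas and 0 for any other Pauli Q.
    Qubits not listed in `pauli` carry the identity and contribute a factor of 1.
    """
    value = 1.0
    for qubit, op in pauli.items():
        basis, outcome = record[qubit]
        if basis != op:
            return 0.0
        value *= 3 * outcome
    return value


def estimate(records: Sequence[Record], pauli: Dict[int, str]) -> float:
    """Average the single-shot estimates over all records."""
    return sum(pauli_estimate(r, pauli) for r in records) / len(records)


records = [[("Z", +1), ("Z", +1)], [("X", -1), ("Z", +1)]]
print(tokenize(records[1]))                 # [1, 4]
print(estimate(records, {0: "Z", 1: "Z"}))  # 4.5
```

With these two records, the \(Z_0 Z_1\) estimate is \((9 + 0)/2 = 4.5\). Single-shot values are large and noisy by design; only the average over many records approaches the true expectation value, which always lies in \([-1, 1]\).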

Imagine we have access to a quantum device that can prepare the ground state of a Hamiltonian with specific coupling parameters and perform classical shadow tomography measurements on it. Running such experiments would yield a dataset that directly links the Hamiltonian parameters to the classical shadows of the corresponding ground state. For this study, we use simulated data to demonstrate the idea. We train a GPT model on classical shadow data for a range of quantum Hamiltonians, teaching it to generate the ground state’s classical shadows in response to the Hamiltonian parameters, much like ChatGPT responds to a prompt. Simply put, ShadowGPT learns to predict quantum properties directly from measurement data (experimental in principle, simulated in this study), bypassing the need for first-principles numerical simulations from scratch.
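Since the study uses simulated data, a toy version of the data-generation step can be sketched with exact diagonalization. The sketch assumes the transverse-field Ising convention \(H = -J\sum_i Z_i Z_{i+1} - h\sum_i X_i\) on a small open chain (the post does not state its sign convention or system sizes). It samples one classical-shadow record by rotating each qubit into a randomly chosen Pauli basis before a computational-basis measurement, then prefixes the record with a hypothetical parameter token such as `h=0.50` to form a prompt/response sequence. The function names and the sequence layout are illustrations only; how ShadowGPT actually conditions on Hamiltonian parameters is not specified here.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
HADAMARD = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S_DAG = np.diag([1, -1j])
# Unitaries that rotate each Pauli eigenbasis onto the computational (Z) basis,
# so that outcome bit 0 corresponds to eigenvalue +1.
ROTATION = {"X": HADAMARD, "Y": HADAMARD @ S_DAG, "Z": I2}


def op_on(site_ops, n):
    """Tensor product with site_ops[q] on qubit q and identity elsewhere (qubit 0 leftmost)."""
    out = np.ones((1, 1), dtype=complex)
    for q in range(n):
        out = np.kron(out, site_ops.get(q, I2))
    return out


def tfim_ground_state(n, h, J=1.0):
    """Exact ground state of H = -J sum_i Z_i Z_{i+1} - h sum_i X_i on an open chain."""
    ham = np.zeros((2**n, 2**n), dtype=complex)
    for i in range(n - 1):
        ham -= J * op_on({i: Z, i + 1: Z}, n)
    for i in range(n):
        ham -= h * op_on({i: X}, n)
    energies, vecs = np.linalg.eigh(ham)
    return energies[0], vecs[:, 0]


def sample_shadow(psi, n, rng):
    """One shadow record: a random Pauli basis per qubit, then a Z-basis measurement."""
    bases = [str(b) for b in rng.choice(list("XYZ"), size=n)]
    state = psi.reshape((2,) * n)
    for q, b in enumerate(bases):
        # Apply the single-qubit rotation to axis q and keep the axis order intact.
        state = np.moveaxis(np.tensordot(ROTATION[b], state, axes=([1], [q])), 0, q)
    probs = np.abs(state.reshape(-1)) ** 2
    idx = int(rng.choice(2**n, p=probs / probs.sum()))
    bits = [(idx >> (n - 1 - q)) & 1 for q in range(n)]
    return [(b, 1 - 2 * bit) for b, bit in zip(bases, bits)]


def training_sequence(h, record):
    """Hypothetical layout: one parameter token, then one (basis, outcome) token per qubit."""
    return [f"h={h:.2f}"] + [b + ("+" if s > 0 else "-") for b, s in record]


rng = np.random.default_rng(0)
n = 4
for h in (0.5, 1.0, 1.5):
    _, psi = tfim_ground_state(n, h)
    print(training_sequence(h, sample_shadow(psi, n, rng)))
```

Exact diagonalization needs memory exponential in \(n\), so this only works for a handful of qubits; in the setting the post envisions, a quantum device would supply these records instead.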

To test ShadowGPT, we applied it to two well-known models in quantum physics: the transverse-field Ising model and the \(\mathbb{Z}_2 \times \mathbb{Z}_2\) cluster-Ising model. Even with a limited training dataset, ShadowGPT was able to interpolate across the parameter space, accurately predicting properties of interest, such as ground-state energy, correlation functions, string operators, and entanglement entropy, for Hamiltonian parameters not included in the training data. These results suggest that AI can serve as a scalable, powerful tool for exploring and understanding complex quantum systems.
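Entanglement entropy is one of the properties read off from shadows. The post does not say which estimator ShadowGPT uses; one standard choice for Pauli shadows is the second Rényi entropy \(S_2 = -\ln \operatorname{Tr}\rho_A^2\), with the purity estimated by averaging \(\operatorname{Tr}(\hat\rho_A^{(i)}\hat\rho_A^{(j)})\) over distinct pairs of records \(i \neq j\). For single-qubit snapshots \((I + 3sP)/2\), each qubit in region \(A\) contributes a factor of 5 (same basis, same outcome), -4 (same basis, opposite outcome), or 1/2 (different bases). The array layout (bases encoded as 0, 1, 2 for X, Y, Z) and the function names are assumptions for illustration, and the demo checks the estimator on two single-qubit cases with known answers rather than on ShadowGPT output.

```python
import numpy as np


def purity_from_shadows(bases, outcomes, subsystem):
    """U-statistic estimate of Tr(rho_A^2) from Pauli classical shadows.

    bases:     (M, n) int array, 0/1/2 = measured in X/Y/Z
    outcomes:  (M, n) int array of +1/-1 outcomes
    subsystem: list of qubit indices forming region A
    """
    b = bases[:, subsystem]
    s = outcomes[:, subsystem]
    same_basis = b[:, None, :] == b[None, :, :]
    same_outcome = s[:, None, :] == s[None, :, :]
    # Per-qubit Tr(rho_hat_i rho_hat_j): 5 (same basis and outcome),
    # -4 (same basis, opposite outcome), 1/2 (different bases).
    factors = np.where(same_basis, np.where(same_outcome, 5.0, -4.0), 0.5)
    kernel = factors.prod(axis=2)
    m = len(bases)
    # Exclude the i == j terms, whose self-overlap would bias the estimate.
    return (kernel.sum() - np.trace(kernel)) / (m * (m - 1))


def renyi2_entropy(bases, outcomes, subsystem):
    """S_2 = -ln Tr(rho_A^2); undefined if the noisy purity estimate is <= 0."""
    return -np.log(purity_from_shadows(bases, outcomes, subsystem))


rng = np.random.default_rng(1)
M = 2000
bases = rng.integers(0, 3, size=(M, 1))
# Pure state |0>: a Z measurement (index 2) always gives +1; X and Y give coin flips.
outcomes_pure = np.where(bases == 2, 1, rng.choice([1, -1], size=(M, 1)))
# Maximally mixed qubit: every outcome is a coin flip, so Tr(rho^2) = 1/2.
outcomes_mixed = rng.choice([1, -1], size=(M, 1))
print(purity_from_shadows(bases, outcomes_pure, [0]))  # close to 1
print(renyi2_entropy(bases, outcomes_mixed, [0]))      # close to ln 2 (about 0.693)
```

The pairwise sum costs \(O(M^2 |A|)\) time and memory for \(M\) records, and the estimator’s variance grows exponentially with \(|A|\), so in practice it suits small regions and many records.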

Looking forward, the potential for data-driven quantum simulation is immense. As quantum computing technology progresses, so will our ability to collect richer datasets from quantum experiments. AI models like ShadowGPT can harness this data and may become powerful tools for exploring and predicting quantum phenomena at scales previously out of reach. In the future, we might even see a new era of Large Language Models for Quantum, where machine learning models not only speed up quantum simulations but also open new frontiers in our understanding of the quantum world.

(Written by OpenAI ChatGPT 4o)